Overview

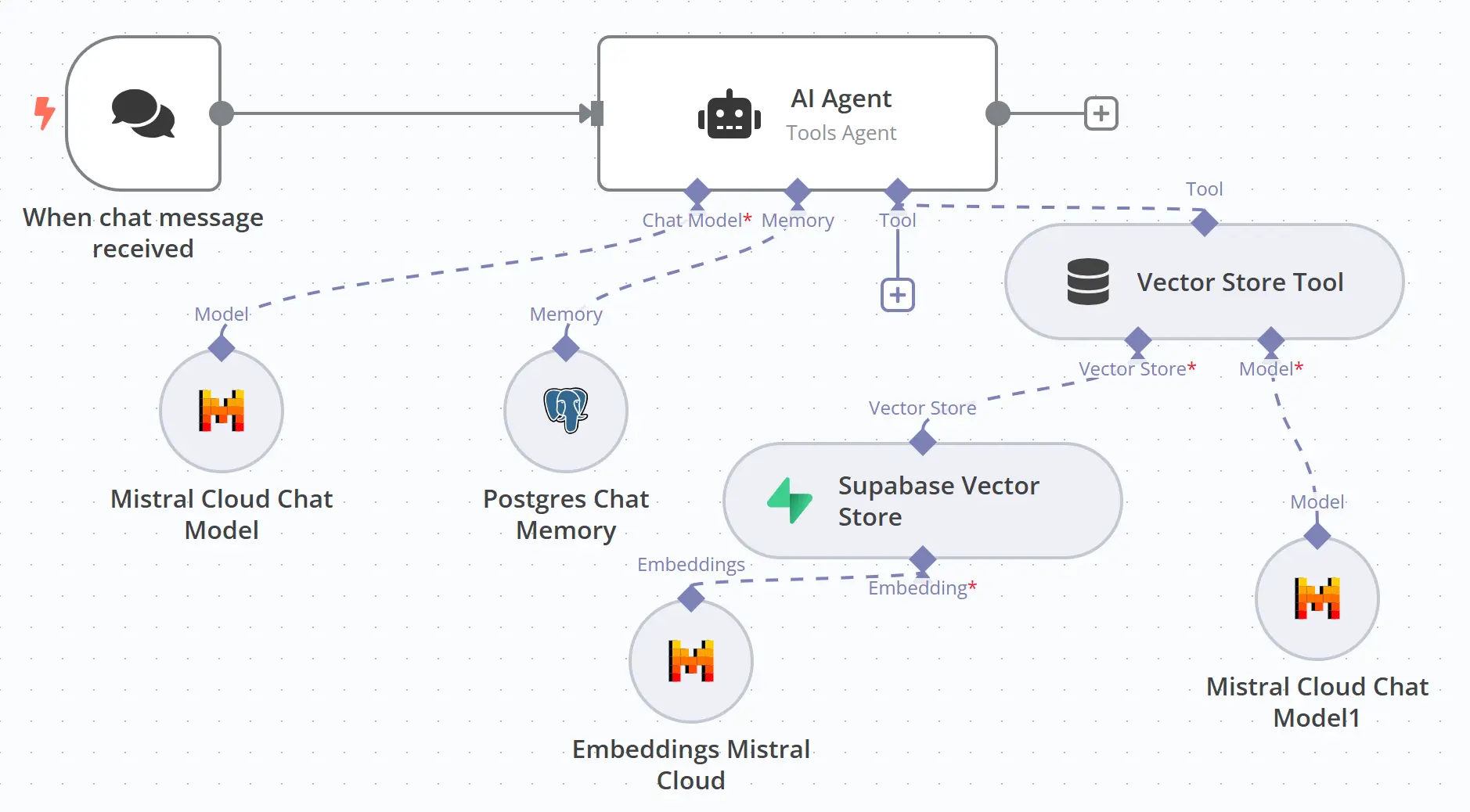

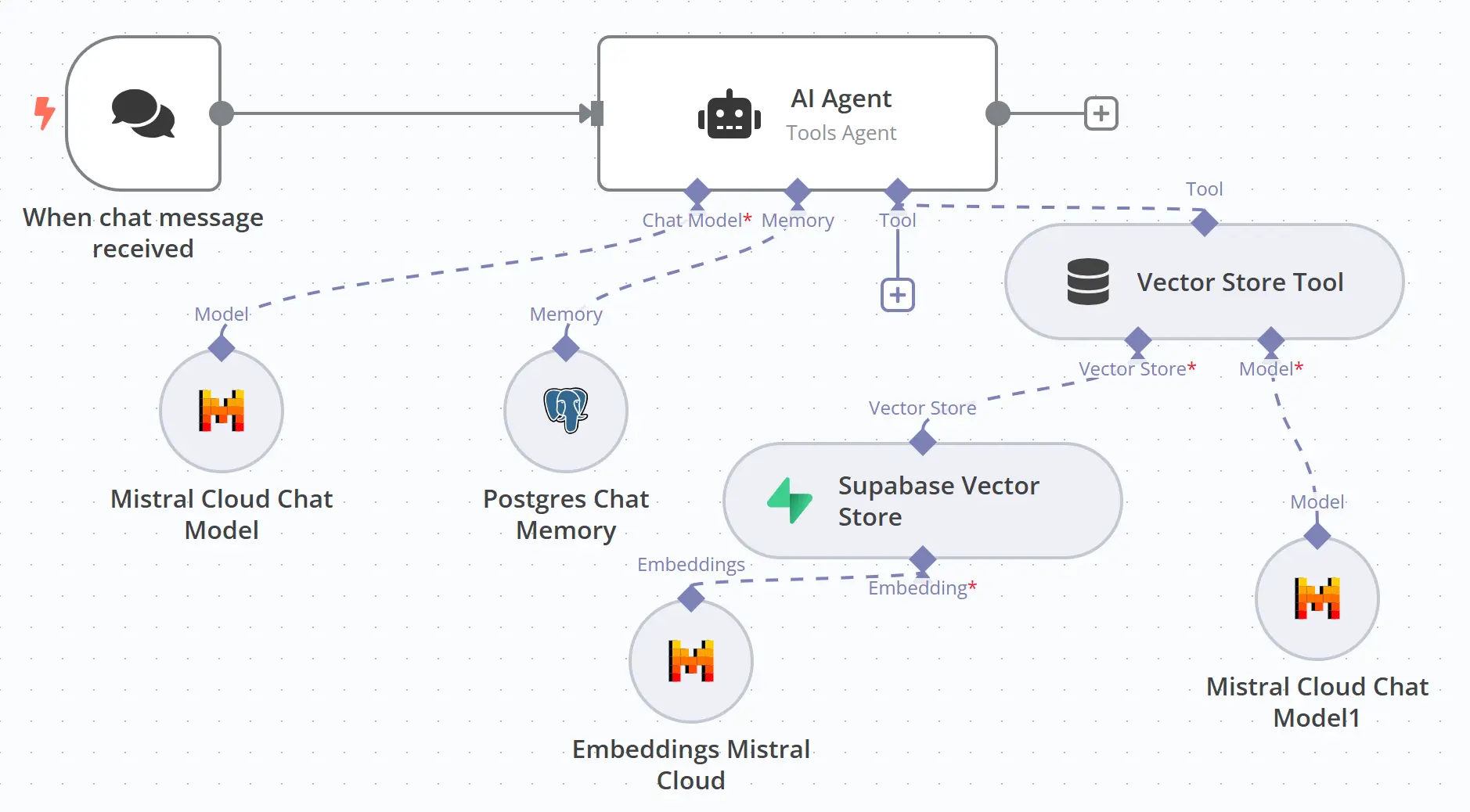

This project is a Retrieval-Augmented Generation (RAG) system built with n8n. It lets users query a document collection in natural language and receive answers grounded in those documents. The system combines vector search, text embeddings, and large language models (LLMs) into a single knowledge retrieval workflow.

The Problem

Organizations struggle with:

- Accessing relevant information across large document repositories

- Maintaining context in conversations

- Providing accurate, source-based responses

- Scaling knowledge management efficiently

The Solution

We built a RAG workflow in n8n that:

- Processes documents and stores their embeddings in a Supabase vector store

- Uses Mistral Cloud embeddings for semantic search

- Stores conversation history in PostgreSQL to maintain context across turns

- Uses LLMs to interpret questions and generate answers from the retrieved content

- Provides source-based, contextual responses
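The retrieval path described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the n8n workflow itself: `embed` is a hypothetical bag-of-words stand-in for Mistral Cloud embeddings (a real embedding model returns dense vectors that capture meaning, not just shared words), `VectorStore` is an in-memory stand-in for the Supabase table, and a bounded `deque` stands in for the PostgreSQL chat history. The names `embed`, `chunk`, `VectorStore`, and `build_prompt`, the chunk size, and the prompt wording are all illustrative.

```python
import math
import re
from collections import Counter, deque
from dataclasses import dataclass, field


def embed(text: str) -> Counter:
    """Hypothetical stand-in for Mistral Cloud embeddings: a sparse
    bag-of-words vector keyed by lowercase tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def chunk(text: str, size: int = 40) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


@dataclass
class VectorStore:
    """In-memory stand-in for the Supabase vector table.
    Each row is (source name, chunk text, embedding)."""
    rows: list = field(default_factory=list)

    def add_document(self, source: str, text: str) -> None:
        # Chunk the document and store one embedded row per chunk.
        for piece in chunk(text):
            self.rows.append((source, piece, embed(piece)))

    def search(self, query: str, k: int = 3) -> list:
        """Return the k most similar chunks as (score, source, text), best first."""
        q = embed(query)
        scored = [(cosine(q, emb), src, txt) for src, txt, emb in self.rows]
        return sorted(scored, key=lambda row: row[0], reverse=True)[:k]


def build_prompt(question: str, store: VectorStore, history: deque, k: int = 3) -> str:
    """Assemble a grounded prompt: retrieved sources, recent turns, then the question."""
    sources = "\n".join(f"[{src}] {txt}" for _, src, txt in store.search(question, k))
    turns = "\n".join(f"{role}: {msg}" for role, msg in history)
    return (
        "Answer using only the sources below and cite them by name.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Conversation so far:\n{turns}\n\n"
        f"User: {question}"
    )


if __name__ == "__main__":
    store = VectorStore()
    store.add_document("handbook.pdf", "Employees accrue 20 vacation days per year.")
    store.add_document("it-policy.pdf", "Passwords must be rotated every 90 days.")

    # Keep only the last 6 turns, standing in for the PostgreSQL chat history.
    history = deque(maxlen=6)
    history.append(("User", "Hi"))
    history.append(("Assistant", "Hello! How can I help?"))

    question = "How many vacation days do employees get?"
    print(store.search(question, k=1)[0][1])  # handbook.pdf
    print(build_prompt(question, store, history, k=1))
```

Bounding the history with `deque(maxlen=...)` keeps the prompt size predictable as a conversation grows, and labeling each retrieved chunk with its source name is what lets the model cite where an answer came from.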

Impact

- Reduces information retrieval time by 90%

- Ensures responses are grounded in actual documents

- Maintains conversation context for better user experience

- Scales knowledge management efficiently

Future Enhancements

- Multi-modal document support

- Advanced query optimization

- Real-time document indexing

- Enhanced security and access controls